Daemons are background processes in a distributed framework, with each daemon performing a specific task.

Data bit for the day – 14th August 2019

Data latency is the time taken to write data into a system or to retrieve data already present in the system.

Data bit for the day – 24th June 2019

Understanding Apache Spark

In my last blog post I discussed data. Now let us look at a modern tool for processing huge datasets (big data) to extract insights from them.

Apache Spark – A fast and general engine for large-scale data processing. Spark is a more sophisticated data processing engine than engines built on the MapReduce model.

One of the key features of Apache Spark is the resilient distributed dataset (RDD). RDDs are data structures available in Spark.
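To make the "resilient" part concrete, here is a minimal pure-Python sketch. It is a toy model, not Spark's API: the class `ToyRDD` and its methods `lose_partition` and `_recompute` are hypothetical names invented for illustration. It assumes the usual description of an RDD as an immutable, partitioned collection that remembers how it was derived, so a lost partition can be rebuilt from its parent. Unlike Spark, this toy computes `map` eagerly.

```python
from typing import Callable, List, Optional


class ToyRDD:
    """Toy model of an RDD (hypothetical, not Spark's real class)."""

    def __init__(self,
                 partitions: List[Optional[List[int]]],
                 parent: Optional["ToyRDD"] = None,
                 fn: Optional[Callable[[int], int]] = None) -> None:
        self.partitions = partitions  # one list per partition
        self.parent = parent          # lineage: the dataset this came from
        self.fn = fn                  # lineage: the function that was applied

    def map(self, fn: Callable[[int], int]) -> "ToyRDD":
        # Build a new dataset and leave this one untouched.
        new_parts = [[fn(x) for x in part] for part in self.partitions]
        return ToyRDD(new_parts, parent=self, fn=fn)

    def lose_partition(self, index: int) -> None:
        # Simulate a worker failure that wipes out one partition.
        self.partitions[index] = None

    def _recompute(self, index: int) -> List[int]:
        # Rebuild a single partition from the parent using the recorded function.
        if self.parent is None or self.fn is None:
            raise RuntimeError("no lineage available for this partition")
        rebuilt = [self.fn(x) for x in self.parent.partitions[index]]
        self.partitions[index] = rebuilt
        return rebuilt

    def collect(self) -> List[int]:
        # Gather all partitions, rebuilding any that are missing.
        out: List[int] = []
        for i, part in enumerate(self.partitions):
            if part is None:
                part = self._recompute(i)
            out.extend(part)
        return out


base = ToyRDD([[1, 2], [3, 4]])
squared = base.map(lambda x: x * x)
squared.lose_partition(1)        # pretend a worker died
recovered = squared.collect()    # the missing partition is rebuilt from `base`
```

Only the lost partition is recomputed here; the surviving one is reused as-is.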

Spark can run on a Hadoop YARN cluster. Its biggest advantage over MapReduce is the ability to keep large datasets in memory.

Application types that benefit from Spark’s processing model are:

- Iterative algorithms

- Interactive analysis
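A rough way to see why iterative algorithms gain from keeping data in memory is to count how often the data has to be read. The sketch below is plain Python, not Spark code. `CountingSource` and `iterate_mean` are hypothetical helpers made up for this illustration, and the "algorithm" is a deliberately trivial one that nudges an estimate toward the data mean on every pass.

```python
from typing import Callable, List


class CountingSource:
    """Hypothetical data source that counts how many times it is read."""

    def __init__(self, values: List[float]) -> None:
        self._values = list(values)
        self.reads = 0

    def load(self) -> List[float]:
        self.reads += 1  # stands in for an expensive disk or network read
        return list(self._values)


def iterate_mean(load: Callable[[], List[float]], iterations: int) -> float:
    """Move an estimate halfway toward the data mean on each iteration."""
    estimate = 0.0
    for _ in range(iterations):
        data = load()  # every iteration needs the full dataset
        mean = sum(data) / len(data)
        estimate += 0.5 * (mean - estimate)
    return estimate


# Without caching: the source is re-read on every iteration.
source = CountingSource([2.0, 4.0, 6.0])
result_uncached = iterate_mean(source.load, 5)
reads_without_cache = source.reads

# With caching: read once, then reuse the in-memory copy.
cached_source = CountingSource([2.0, 4.0, 6.0])
in_memory = cached_source.load()
result_cached = iterate_mean(lambda: in_memory, 5)
reads_with_cache = cached_source.reads
```

Both runs produce the same estimate, but the cached run touches the source once instead of once per iteration. Interactive analysis benefits in the same way, since each new query can reuse data that is already loaded.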

Other aspects that make Spark easier to adopt are:

Spark DAG (Directed Acyclic Graph) – This component of the engine converts an arbitrary number of operations into a single job.
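The idea of turning several operations into one job can be sketched in plain Python. This is a toy model and not Spark's scheduler: `LazyPipeline` is a hypothetical class, and it only handles a straight chain of steps, which is the simplest possible DAG. It assumes the common description of Spark in which transformations are only recorded and an action (here `collect`) triggers the actual work.

```python
from typing import Any, Callable, Iterable, List, Tuple

Step = Tuple[str, Callable[[Any], Any]]


class LazyPipeline:
    """Records operations and runs them together in a single pass."""

    def __init__(self, source: Iterable[Any], steps: Tuple[Step, ...] = ()) -> None:
        self._source = source
        self._steps = steps

    def map(self, fn: Callable[[Any], Any]) -> "LazyPipeline":
        # Nothing is computed here; the step is only appended to the plan.
        return LazyPipeline(self._source, self._steps + (("map", fn),))

    def filter(self, predicate: Callable[[Any], bool]) -> "LazyPipeline":
        return LazyPipeline(self._source, self._steps + (("filter", predicate),))

    def collect(self) -> List[Any]:
        # The "action": every recorded step runs in one pass over the data.
        out: List[Any] = []
        for item in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                out.append(item)
        return out


calls: List[int] = []


def traced_double(x: int) -> int:
    calls.append(x)  # record that work actually happened
    return x * 2


pipeline = LazyPipeline(range(1, 6)).map(traced_double).filter(lambda x: x > 4)
calls_before_action = len(calls)  # still zero: nothing has run yet
result = pipeline.collect()       # both steps run now, as one job
```

Because the whole plan is known before anything runs, the engine is free to combine steps instead of materializing an intermediate dataset after each one.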

User experience – Spark smooths the user experience by offering a plethora of APIs for data processing tasks.

Spark has APIs in these languages: Scala, Java, Python and R.

The Spark shell (also known as the Spark CLI or Spark REPL) makes it simple to work on datasets interactively. REPL stands for read-eval-print loop.

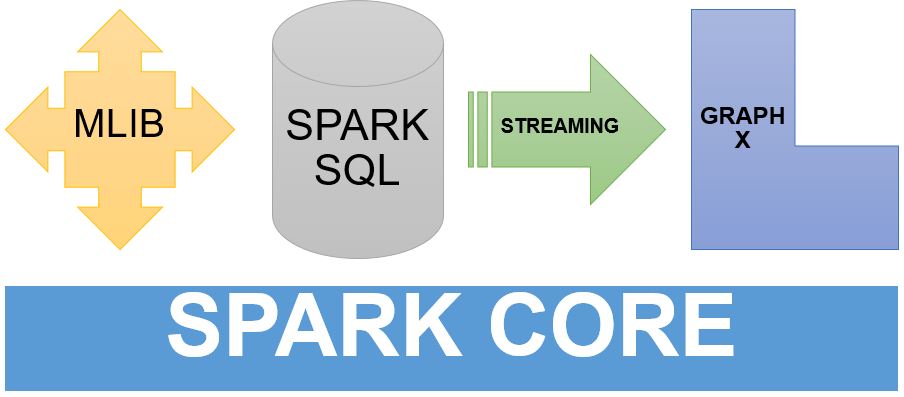

Spark also provides modules for:

- Machine learning (MLlib) – Provides a framework for distributed machine learning.

- Graph processing (GraphX) – Provides a framework for distributed graph processing.

- Stream processing (Spark Streaming) – Helpful for streaming (near-real-time) analytics. Data is ingested in mini-batches, and RDD transformations are performed on these mini-batches.

- SQL (Spark SQL) – Provides a data abstraction originally known as SchemaRDD (renamed DataFrame in Spark 1.3), which supports structured and semi-structured data.
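The mini-batch idea from the stream processing module can be illustrated with a small pure-Python sketch. It is not Spark Streaming code: `mini_batches` and `word_counts` are hypothetical helpers, and batches here are cut by item count for simplicity, whereas Spark Streaming cuts them by time interval.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List


def mini_batches(stream: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Cut an incoming stream into consecutive small batches."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def word_counts(batch: List[str]) -> Dict[str, int]:
    """The per-batch transformation: count the words in one mini-batch."""
    counts: Dict[str, int] = {}
    for line in batch:
        for word in line.split():
            counts[word] = counts.get(word, 0) + 1
    return counts


events = ["spark streaming", "spark sql", "graph processing", "spark core", "sql"]

# Each mini-batch is processed on its own, like a small batch job.
per_batch = [word_counts(batch) for batch in mini_batches(events, 2)]
```

Each result covers only its own batch, which is why streaming output arrives as a series of small updates rather than one final answer.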

These components operate on Spark Core. Spark Core provides a platform for in-memory computing and for referencing datasets in external storage systems.

Companies that use Apache Spark – Google, Facebook, Twitter, Amazon, Oracle, et al.

Spark services are available on notable cloud platforms such as Google Cloud Platform (GCP), Amazon Web Services (AWS) and Microsoft Azure.

Source: Apache Spark